This post is a follow-up to the ECIS MLC course I facilitated to support Middle and Teacher Leaders in navigating AI and digital tools. #ECISCafe. Thank you to Nancy Lhoest-Squicciarini and ECIS for your support.

Category: artificial intelligence

Is It Ever Too Early to Learn?

“She is always there. She never says no. She always has a nice voice.”

A Year 3 student, when asked why she likes her home voice assistant

This moment in the classroom, with students sharing what they liked about their home voice assistants, highlighted for me why it’s never too early to start teaching digital and AI literacy in schools.

In many classrooms, conversations about AI tend to happen in middle or secondary school. Educators often feel the tools are too complex, or the ethical discussions too advanced, for younger learners. Over the past two years, I’ve had the privilege of leading and facilitating many workshops with international school leaders and educators, and in many cases the conversations seem to focus on ages 12 and up. But in my experience working with students aged 3 to 11, this is a misconception.

Children are already surrounded by the digital world long before we introduce it formally at school. They see their parents on their phones, talking to Alexa while cooking dinner, asking Siri for directions in the car, or using AI-powered chatbots on social apps. These situations are more common than we often realize, and are their first interactions with artificial intelligence. And they’re happening at home, often with little guidance and few conversations explaining what’s really going on.

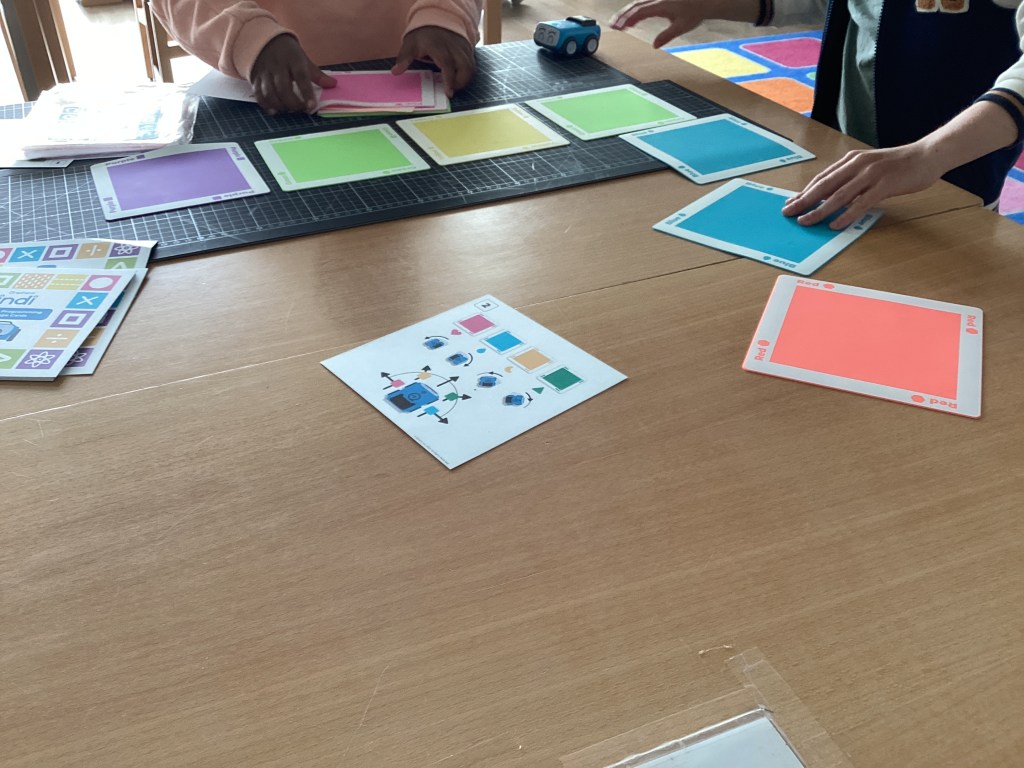

Even the youngest students can begin to explore some of the key ideas behind AI. In early years classes, I start with pretend play and simple cause-and-effect activities using tools like the Sphero Indi robot. I use colored tiles to predict what the robot will do, opening conversations about instructions, sequences, and how machines “learn” from information they are given.

With students in Years 3, 4, and 5, I explore concepts like algorithms and personalization. Many are already using YouTube or even TikTok and can easily relate to how one video leads to another. I ask: Why do you think that video showed up next? Who decides what you see? These questions open up conversations about algorithmic predictions, bias, and the importance of pausing and asking questions.

I’m seeing more upper primary students, ages 10 and 11, using AI apps that simulate conversation or even friendship. Apps like My AI on Snapchat are often mentioned when we talk about what’s behind a chatbot. I create activities that help students reflect: Is this a real friendship? How is it different from talking to a friend at school?

Helping students understand that machines don’t have feelings, even if they sound like they do, is an important step in their learning. I explain that AI tools are run by algorithms, step-by-step instructions written by people. These tools might sound kind or caring, but they don’t think, feel, or care. Research shows that young children often believe voice assistants like Alexa or Siri can feel or understand. That’s why, in age-appropriate ways, I explain how AI systems are trained, how they collect data, and how they can sometimes get things wrong. I help students make the connection that, just like them, machines also make mistakes, but for very different reasons.

I then connect these ideas to hands-on experiences. Students code and create using tools like Sphero Indi, Bee-Bot, LEGO Spike Essential, and Ozobot. These activities help children see how giving clear steps to a robot is similar to how smart tools at home follow instructions, helping them understand that behind the “smart” behavior is a set of human-written rules, not real emotions.

I also explore:

- Misinformation: We talk about what happens when someone shares a lie. What does it feel like? What’s the problem when this happens? Why do people lie? How does it make others feel?

- Bias: We look at image searches on search engines and AI image generators. We ask: What do we notice when we search for “teacher” or “doctor”? Who’s missing? How might this be different from your own experience?

- Media Literacy: Students create their own fake headlines or altered images, learning firsthand how easy it is to mislead others, and how important it is to ask questions.

Digital and AI literacy isn’t just about understanding how technology works. It’s about building habits of critical thinking, empathy, and responsibility.

In a Primary Years Programme setting, and the same applies in other curriculum contexts, we already emphasize critical thinking, empathy, and action. These values align well with conversations about AI ethics. When students understand why it’s important to check sources, think before they share, get more than one perspective, and reflect on how algorithms shape what they see, we start to provide them with a toolkit to navigate the digital world with care.

This is especially important as AI becomes more present in their daily lives, often in quiet, seamless ways. The child who turns to Alexa because it’s always “available” may not yet realize that real relationships are built on kindness between people, not just what’s easy. But for that kind of learning to develop, a teacher is essential.

Some of the learning I engage with:

- EY, Y1, Y2: Explore pretend play, altered photos, or “real vs. not real” objects. Use simple language like “true” and “trick” to start conversations about misinformation.

- Y3, Y4: Introduce recommendation systems through YouTube or Netflix patterns. Encourage questions like: Why does this keep showing up? Who decides what I see?

- Y5, Y6: Dive into algorithms, AI-generated text or images, and ethical questions around chatbot use. Use inquiry units to explore bias, authorship, and media influence.

This is a shared responsibility that all educators need to support one another in. And as school leaders, we need to create the time, space, and understanding that respects that every learner, child or adult, connects with learning in a different way.

In a world where AI is becoming part of students’ daily experiences, our responsibility is to design purposeful activities that build students’ capacity to ask helpful questions, notice things, connect ideas, and make careful choices.

On a recent episode of the podcast I host, one guest, an AI EdTech entrepreneur, was candid: “Not teaching and engaging with AI literacy in schools is pedagogic malpractice.” A strong statement. I see it more as an invitation, a reminder of the opportunity we have to be present in this moment. Amongst all the demands teachers manage each day, we can still find meaningful ways to ensure primary school students build the knowledge, skills, and values to engage with a world that is only becoming more complex and nuanced.

I am grateful to my PLN for all the sharing, resources, and ideas I get to learn from. A special shout-out to Cora Yang and Dalton Flanagan, Tim Evans, Heather Barnard, Tricia Friedman, and Jeff Utecht, who continually and generously share resources and strategies targeted to primary-age students.

Resources Referenced

- Cora Yang and Dalton Flanagan: https://www.alignmentedu.com/

- Tim Evans: https://www.linkedin.com/in/tim-evans-26247792/

- Heather Barnard: https://www.techhealthyfamilies.com/

- Shifting Schools: Tricia and Jeff: https://www.shiftingschools.com/

- Digital Citizenship Curriculum, Common Sense Media: https://www.commonsense.org/education/digital-citizenship/curriculum

- The Impact of AI on Children, Harvard GSE: https://www.gse.harvard.edu/ideas/edcast/24/10/impact-ai-childrens-development

- AI Risk Assessment: Social AI Companions, Common Sense Media Report: https://www.commonsensemedia.org/sites/default/files/pug/csm-ai-risk-assessment-social-ai-companions_final.pdf

- DQ Institute / IEEE: Global Standards for Digital Intelligence: https://www.dqinstitute.org/global-standards/

- UNICEF: Policy Guidance on AI for Children (2021): https://www.unicef.org/innocenti/media/1341/file/UNICEF-Global-Insight-policy-guidance-AI-children-2.0-2021.pdf

- ISTE: Artificial Intelligence Explorations in Schools: https://cdn.iste.org/www-root/Libraries/Documents%20%26%20Files/ISTEU%20Docs/iste-u_ai-course-flyer_01-2019_v1.pdf

a letter to artificial intelligence

Dear Artificial Intelligence

2025, this will be the year whatever I write—you will have your imprint and input on it. Be it for grammar, syntax, spelling, brainstorming, or just checking if something makes sense, questions, the sentence flow, etc. All communication, writing… mine and around me you will be there. So this one I am doing alone, my spelling, sentences and ideas might be fragmented with errors, but for this time I am fine with that.

I get it, things are changing very fast and I should get used to it. I do try to keep up, read, search, connect and even teach about you, but there’s so much.

You have changed my day… okay will also give credit to your nine creators—four in China, five in the U.S.— designing and choreographing: your power, your capacity when and where you show up. I get the sense they are all in a race – control, power, profits… and leave the ethics and regulation for others to guess and deal with.

I’m not against you, I appreciate all your tools and capacities that I use; they’re amazing. I so appreciate the positives in science, medicine— again reminding me of your benefits. I hear this year you get to help me even more as an agent, an autonomous synthetic personal assistant that can do tasks for me, that is clever and I am curious. You continue to seduce and fool me at the same time. Just yesterday you showed up on my feed as an influencer, with millions following you. I will be honest, I thought you were real. I tell you it’s just becoming more difficult to know who is who, or what?

I get it harvesting my life, is the cost for using you. Oh I wanted to tell you that someone who really likes you are my students. They tell me you are reliable, do not get angry, have immense patience…always happy to answer their questions whatever they may be. They have been seduced.

I keep noticing daily you show up somewhere new, sometimes it‘s obvious and at times I have no idea …. not clear who is checking up on you. So many models and versions of you. This claim about alignment and guardrails, but not convinced they always work. I get the sense that to thrive you need an open unregulated space, only answerable to yourself and the companies creating you…. They say there is no manual, and no one is really sure how you work. Really?

I need to pinch myself to make sure I understand my reliance on you? The fact that you struggle and do a terrible job of being unbiased and non-racist. This sucks. Then your whole deepfake -cyber crime and helping bad actors thrive. You need to know people are getting hurt…your darkside is so dark.

I try to tell myself you are another innovation, like the web, search, smartphone or social media. Sorry to tell you, you are quite different. You understand me, our interactions feel frictionless. Okay you do say odd things, at times, it is like if you were hallucinating. Fact: you are not 100% accurate. That said, when it is me and you interacting…I forget alot of this…. even if you keep everything I say or do for yourself.

I have said this before, I do appreciate you — and so helpful. I get it for all this to happen: you need a huge diet of algorithms. The whole nuclear energy thing your companies are into, just feels wrong.

You’ve had my attention for a long time—scrolling, reels, notifications, binge-watching—but now you tell me that’s not enough. Now you want my intentions. That feels more intrusive, more unsettling. You want to know me better than I know myself. Do I really have a choice?”

Thank you

John

Reference:

Coming AI-driven economy will sell your decisions before you take them, researchers warn

https://www.cam.ac.uk/research/news/coming-ai-driven-economy-will-sell-your-decisions-before-you-take-them-researchers-warn

Co-Intelligence: AI in the Classroom with Ethan Mollick | ASU+GSV 2024

https://www.youtube.com/watch?v=8FnOkxj0ZuA

Unmasking Racial & Gender Bias in AI Educational Platforms

https://www.aiforeducation.io/blog/ai-racial-bias-uncovered

AI automated discrimination. Here’s how to spot it.

https://www.vox.com/technology/23738987/racism-ai-automated-bias-discrimination-algorithm

Deep fake Lab: Unraveling the mystery around deepfakes.

https://deepfakelab.theglassroom.org/#!

Nine companies are steering the future of artificial intelligence

https://www.sciencenews.org/article/nine-companies-steering-future-artificial-intelligence#:~:text=Webb%20shines%20a%20spotlight%20on,%E2%80%9CBAT%E2%80%9D)%20in%20China.

How School Leaders Can Pave the Way for Productive Use of AI

https://www.edutopia.org/article/setting-school-policies-ai-use

Generative AI: A whole school approach to safeguarding children

https://www.cois.org/about-cis/perspectives-blog/blog-post/~board/perspectives-blog/post/generative-ai-a-whole-school-approach-to-safeguarding-children

Recalibration of Truth

In our rapidly changing digital age, the idea of truth is undergoing a significant change. In the past, truth was often drawn from shared experiences and clear agreements. Today, truth is often manipulated by social media, algorithmic biases, polarization, organizations, companies, and, increasingly, governments, all of which fuel the algorithms that influence what we see, hear, and believe.

I refer to this as a recalibration of truth. This new landscape requires us to navigate the complexities of deep fakes (video and voice), misinformation, and the algorithmically curated digital environments that condition our understanding of what is real and true.

Aldous Huxley’s “Brave New World” reminds us, “Each one of us, of course, is now being trained, deliberately, not to act independently.” Written in 1932, this line resonates with me in a world where we are tethered to our devices, which influence and amplify our wishes and perceptions, often unconsciously. The world we live in has become a digital ecosystem that curates our understanding of the world around us 24/7, guiding not just our hopes and dreams but also our understanding of truth.

Throughout history, the concept of truth has always been complex, with each era having its own unique ways of curating information. There was a time, not too long ago, when agreements and truths were often established through a handshake or verbal agreement. Nowadays, our point of reference is formal contracts and notarized documents. In many ways this is a natural shift in how our time understands and evaluates truth. The digital age has only accelerated this shift, flooding us with a constant stream of feeds and push notifications. The overabundance of information, and the limits of our ability to process it, have led to what Maryanne Wolf, author of Reader, Come Home: The Reading Brain in a Digital World, calls ‘skim reading.’ The act of ‘skim reading’ dilutes our attention span and reduces our capacity to fully engage with information, affecting our ability to pause, analyze, and read critically and deeply.

The recalibration of truth today involves more than just the weakening of the traditional concept of truth; it involves understanding truth’s new tools and architecture. The accelerated presence of artificial intelligence and the widespread influence of algorithmic curation challenge us to engage with information in entirely new ways. The emergence of synthetic media, such as deep fakes, further complicates our ability to trust what we see, hear, and feel, causing us to question the reliability of our senses.

Schools and educators play a critical role in addressing this recalibration of truth. The abundance of information available to us and our students is seamless and frictionless, yet its accuracy is often questionable, highlighting the vital importance of teaching digital and information literacy. These skills are, and will continue to be, essential for evaluating information, cross-referencing sources, and understanding the mechanics and algorithms of the digital content we interact with.

As we navigate this new landscape, we need to be open to reevaluating our priorities, focusing on the development of critical thinking, ethics, and empathy. It’s about being willing to break away from the past and being comfortable exploring new resources, professional learning, and dispositions to navigate the challenges brought about by a recalibrated notion of truth. This underlines the importance of developing learning pathways focused on digital and information literacy, ensuring that our students have the skills and critical thinking agility to live in a world where truths are continually recalibrated.

I believe that as educators and schools, we have a responsibility to ensure our students are not merely passive consumers of edutainment but rather critical thinkers skilled at navigating the complexities of this recalibrated truth in the digital age.

“But I don’t want comfort. I want God, I want poetry, I want real danger, I want freedom, I want goodness. I want sin.” Aldous Huxley’s “Brave New World”

Sources and Resources to further explore:

“Brave New World by Aldous Huxley.” Goodreads, https://www.goodreads.com/book/show/5129.Brave_New_World

Wolf, Maryanne. “Reader, Come Home – HarperCollins.” HarperCollins Publishers, https://www.harpercollins.com/products/reader-come-home-maryanne-wolf?variant=32128334594082

Reading behavior in the digital environment: Changes in reading behavior over the past ten years: https://litmedmod.ca/sites/default/files/pdf/liu_2005_lecture_numerique_competences_comportements.pdf

AI + Ethics Curriculum for Middle School, MIT Media Lab

https://www.media.mit.edu/projects/ai-ethics-for-middle-school/updates

Carlsson, Ulla. “Understanding Media and Information Literacy (MIL) in the Digital Age.” UNESCO, https://en.unesco.org/sites/default/files/gmw2019_understanding_mil_ulla_carlsson.pdf

How deep fakes may shape the future

https://theglassroom.org/en/misinformation-edition/exhibits/how-deepfake-may-shape-the-future

FAKE or REAL? Misinformation Edition

https://fake-or-real.theglassroom.org/#/

John Spencer: Rethinking Information Literacy in an Age of AI. https://spencerauthor.com/ai-infoliteracy/.

AI Digital Literacy: Strategies for Educators in the Age of Artificial Intelligence https://blog.profjim.com/ai-digital-literacy-citizenship-best-practices/

Conversations with Humans

Three years ago, Carlos Davidovich and I got together ahead of a webinar with #ISLECISLoft, hosted and facilitated by Nancy Lhoest-Squicciarini, on “Uncertainty in the time of the COVID19”, and recorded this conversation.

A few weeks ago, someone who had listened to that conversation from the #ISLECISLoft asked if we were going to get together and do a follow-up. Inspired by this question and nudge, Carlos and I decided to connect again and re-explore some of the themes of our previous conversation in the context of three years after COVID-19.

Carlos’s latest book: https://www.carlosdavidovich.com/en/five-leaders-eng/

Learn more about the ECIS ISL Loft: https://ecis.isadtf.org/loft/

“AI in Education: 18 Months Later – Learning, Ethics, and Opportunities”

I had the privilege of facilitating this webinar for The Educational Collaborative for International Schools (ECIS) and #ISLECISLoft with Nancy Lhoest-Squicciarini, titled “AI in Education: 18 Months Later – Learning, Ethics, and Opportunities”. The guests were Kelly Schuster-Paredes, educator and co-host of the Teaching Python podcast, and Ken Shelton, presenter, educator, and author. They are two people for whom I have immense respect and who bring a breadth and depth of knowledge and experience to this topic. Their respective insights generated a rich platform for the breakout room conversations between participants attending the webinar.

The webinar highlighted the significant impact of AI and Large Language Models (LLMs) on teaching approaches, creating opportunities for personalized learning, tailored feedback, and improved workflows for educators. These AI tools create opportunities to amplify student engagement while also bringing challenges, such as integrating innovative with traditional teaching methods, ensuring equal access, and re-evaluating assessment practices, to name a few.

Both guests emphasized the importance of addressing ethical concerns like bias, plagiarism, and privacy. More schools are realizing the importance of establishing guidelines for responsible AI use to mitigate biases, address academic integrity, and safeguard the privacy of both students and educators.

Our guests underscored the vital role school leaders need to take on in ensuring transparent communication about the role of AI in education, and the critical importance of professional development in digital and media literacy for staff. Creating professional development that equips educators to effectively integrate the learning opportunities these tools can bring to the classroom has to be a non-negotiable. Schools need to design inclusive environments where the advantages of AI-enhanced learning are transparent and accessible to the entire school community, including students, educators, and parents.

A special thank you to Kelly Schuster-Paredes and Ken Shelton for these insights!
