“She is always there. She never says no. She always has a nice voice.”

A Year 3 student, when asked why she likes her home voice assistant

This moment in the classroom, with students sharing what they liked about their home voice assistants, highlighted for me why it’s never too early to start teaching digital and AI literacy in schools.

In many classrooms, conversations about AI tend to happen in middle or secondary school. Educators often feel the tools are too complex, or that the ethical discussions are too advanced for younger learners. Over the past two years, I’ve had the privilege of leading and facilitating many workshops with international school leaders and educators, and in many cases the conversations focus on ages 12 and up. But in my experience working with students aged 3 to 11, the belief that AI literacy has to wait until then is a misconception.

Children are already surrounded by the digital world long before we introduce it formally at school. They see their parents on their phones, talking to Alexa while cooking dinner, asking Siri for directions in the car, or using AI-powered chatbots on social apps. These situations are more common than we often realize, and they are children’s first interactions with artificial intelligence. And they’re happening at home, often with little guidance and few conversations explaining what’s really going on.

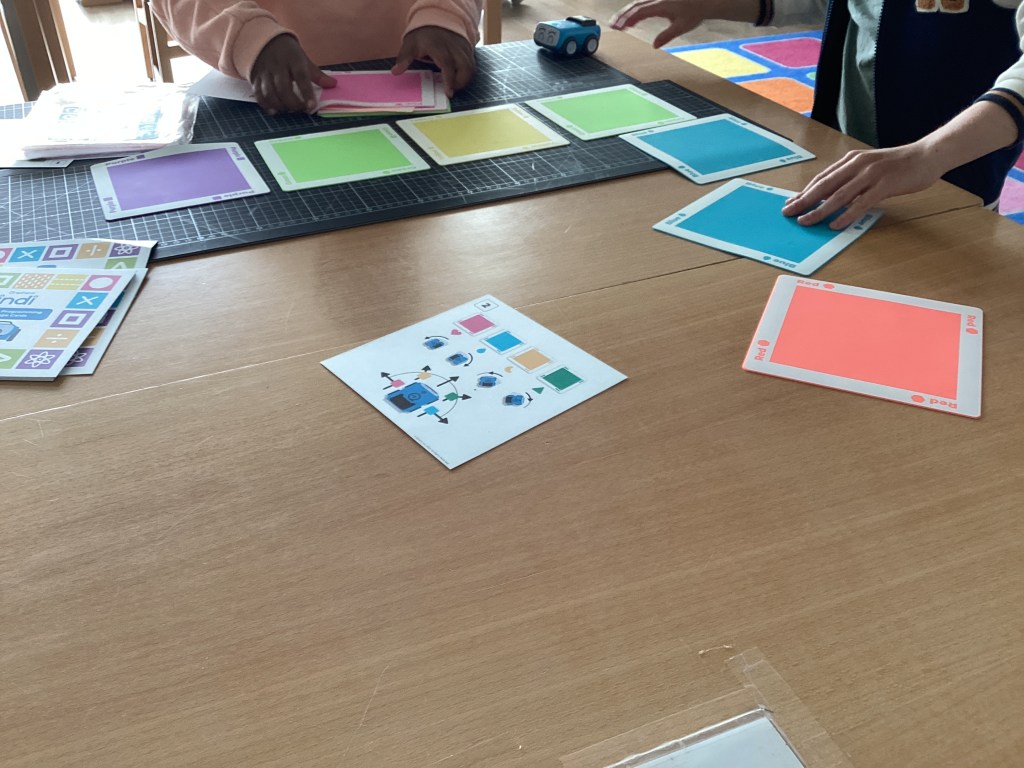

Even the youngest students can begin to explore some of the key ideas behind AI. In early years classes, I start with pretend play and simple cause-and-effect activities using tools like the Sphero Indi robot. We use colored tiles to predict what the robot will do, which opens conversations about instructions, sequences, and how machines “learn” from the information they are given.

With students in Years 3, 4, and 5, I explore concepts like algorithms and personalization. Many are already using YouTube or even TikTok and can easily relate to how one video leads to another. I ask: Why do you think that video showed up next? Who decides what you see? These questions open up conversations about algorithmic predictions, bias, and the importance of pausing and asking questions.

I’m seeing more upper primary students, ages 10 and 11, using AI apps that simulate conversation or even friendship. Apps like My AI on Snapchat are often mentioned when we talk about what’s behind a chatbot. I create activities that help students reflect: Is this a real friendship? How is it different from talking to a friend at school?

Helping students understand that machines don’t have feelings, even if they sound like they do, is an important step in their learning. I explain that AI tools are run by algorithms: step-by-step instructions written by people. These tools might sound kind or caring, but they don’t think, feel, or care. Research suggests that young children often believe voice assistants like Alexa or Siri can feel or understand. That’s why, in age-appropriate ways, I explain how AI systems are trained, how they collect data, and how they can sometimes get things wrong. I help students see that, just like them, machines make mistakes, but for very different reasons.

I then connect these ideas to hands-on experiences. Students code and create using tools like Sphero Indi, Bee-Bot, LEGO Spike Essential, and Ozobot. These activities help children see that giving clear steps to a robot is similar to how smart tools at home follow instructions. Behind the “smart” behavior is a set of human-written rules, not real emotions.

I also explore:

- Misinformation: We talk about what happens when someone shares a lie. What does it feel like? What’s the problem when this happens? Why do people lie? How does it make others feel?

- Bias: We look at the results of image searches on search engines and in AI image generators. We ask: What do we notice when we search for “teacher” or “doctor”? Who’s missing? How might this be different from your own experience?

- Media Literacy: Students create their own fake headlines or altered images, learning firsthand how easy it is to mislead others, and how important it is to ask questions.

Digital and AI literacy isn’t just about understanding how technology works. It’s about building habits of critical thinking, empathy, and responsibility.

In a Primary Years Programme (PYP) setting, as in other curriculum contexts, we already emphasize critical thinking, empathy, and action. These values align well with conversations about AI ethics. When students understand why it’s important to check sources, think before they share, seek more than one perspective, and reflect on how algorithms shape what they see, we begin to give them a toolkit for navigating the digital world with care.

This is especially important as AI becomes more present in their daily lives, often in quiet, seamless ways. The child who turns to Alexa because it’s always “available” may not yet realize that real relationships are built on kindness between people, not just on what’s easy. For that kind of learning to develop, a teacher is essential.

Some of the learning I engage students in, by year group:

- EY, Y1, Y2: Explore pretend play, altered photos, or “real vs. not real” objects. Use simple language like “true” and “trick” to start conversations about misinformation.

- Y3, Y4: Introduce recommendation systems through YouTube or Netflix patterns. Encourage questions like: Why does this keep showing up? Who decides what I see?

- Y5, Y6: Dive into algorithms, AI-generated text or images, and ethical questions around chatbot use. Use inquiry units to explore bias, authorship, and media influence.

This is a shared responsibility, and all educators need to support one another in it. As school leaders, we need to create the time, space, and understanding to respect that every learner, child or adult, connects with learning in a different way.

In a world where AI is becoming part of students’ daily experiences, our responsibility is to design purposeful activities that build students’ capacity to ask helpful questions, notice things, connect ideas, and make careful choices.

On a recent episode of the podcast I host, one guest, an AI EdTech entrepreneur, was candid: “Not teaching and engaging with AI literacy in schools is pedagogic malpractice.” A strong statement. I see it more as an invitation, a reminder of the opportunity we have to be present in this moment. Amongst all the demands teachers manage each day, we can still find meaningful ways to ensure primary school students build the knowledge, skills, and values to engage with a world that is only becoming more complex and nuanced.

I am grateful to my PLN (professional learning network) for all the sharing, resources, and ideas I get to learn from. A special shout-out to Cora Yang and Dalton Flanagan, Tim Evans, Heather Barnard, Tricia Friedman, and Jeff Utecht, who continually and generously share resources and strategies for primary-age students.

Resources Referenced

- Cora Yang and Dalton Flanagan: https://www.alignmentedu.com/

- Tim Evans: https://www.linkedin.com/in/tim-evans-26247792/

- Heather Barnard: https://www.techhealthyfamilies.com/

- Shifting Schools (Tricia Friedman and Jeff Utecht): https://www.shiftingschools.com/

- Digital Citizenship Curriculum (Common Sense Media): https://www.commonsense.org/education/digital-citizenship/curriculum

- The Impact of AI on Children (Harvard GSE): https://www.gse.harvard.edu/ideas/edcast/24/10/impact-ai-childrens-development

- AI Risk Assessment: Social AI Companions (Common Sense Media report): https://www.commonsensemedia.org/sites/default/files/pug/csm-ai-risk-assessment-social-ai-companions_final.pdf

- Global Standards for Digital Intelligence (DQ Institute / IEEE): https://www.dqinstitute.org/global-standards/

- Policy Guidance on AI for Children, 2021 (UNICEF): https://www.unicef.org/innocenti/media/1341/file/UNICEF-Global-Insight-policy-guidance-AI-children-2.0-2021.pdf

- Artificial Intelligence Explorations in Schools (ISTE): https://cdn.iste.org/www-root/Libraries/Documents%20%26%20Files/ISTEU%20Docs/iste-u_ai-course-flyer_01-2019_v1.pdf
